Microsoft Exchange Server 2013 DAG : Failed to notify source server about the local truncation point

I was recently tasked with a project that included migrating an Exchange Server 2010 environment to Exchange Server 2013. Fast forward: everything had been migrated to a single server, the second server was set up and configured properly, and it was time to add the mailbox database copies.

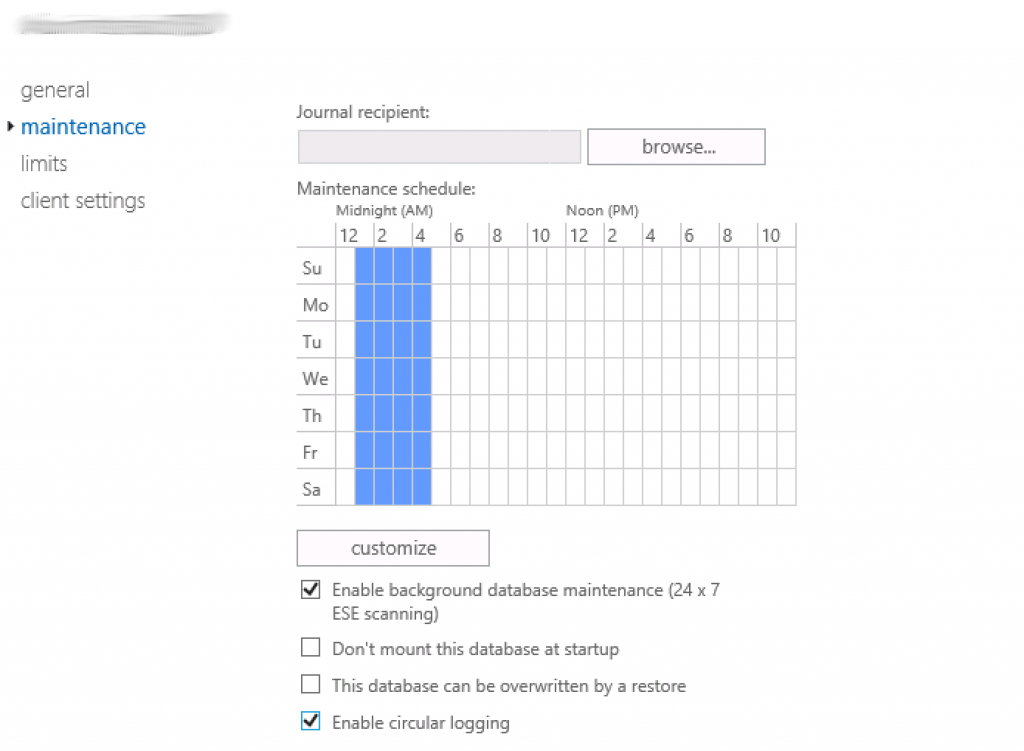

The customer required circular logging to be enabled, but circular logging must be disabled before a mailbox database copy can be added.

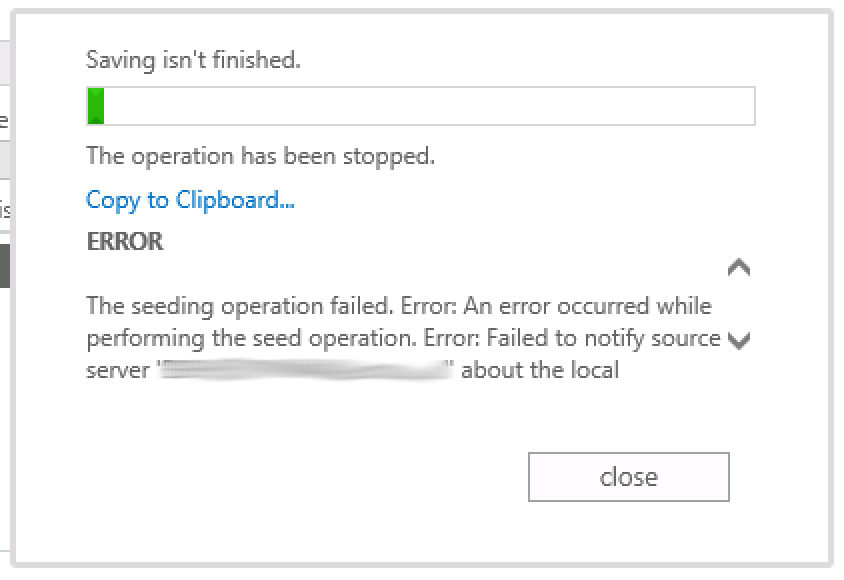
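In Exchange Management Shell, that prerequisite might look like the sketch below. `DB01` and `EXCH02` are hypothetical names for the database and the second DAG member; they are not taken from this environment. As far as I know, while a database has only one copy, the circular logging change takes effect only after the database is dismounted and mounted again.

```powershell
# Hypothetical names: DB01 (database), EXCH02 (server that will host the new copy)
Set-MailboxDatabase -Identity "DB01" -CircularLoggingEnabled $false

# With a single copy, the new setting applies only after a remount
Dismount-Database -Identity "DB01" -Confirm:$false
Mount-Database -Identity "DB01"

# Add the passive copy on the second DAG member
Add-MailboxDatabaseCopy -Identity "DB01" -MailboxServer "EXCH02"
```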

While attempting to add one of the databases I got the following error:

The seeding operation failed. Error: An error occurred while performing the seed operation. Error: Failed to notify source server ‘ExchangeServer FQDN’ about the local truncation point. Hresult: 0xc8000713. Error: Unable to find the file. [Database: DATABASE, Server: ExchangeServer FQDN]

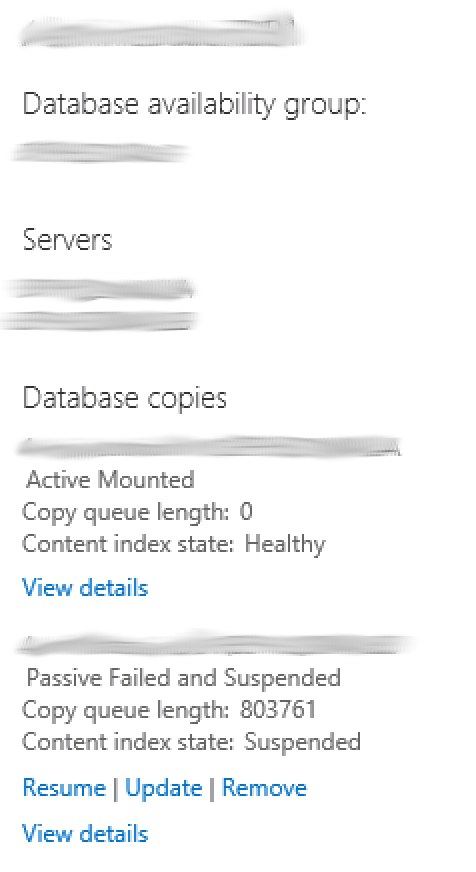

The database copy then showed a status of Failed and Suspended.

I tried adding another database, thinking the problem might be specific to that one database, but the result was the same. I did some quick research on the subject, and most of the responses said that you should:

- Dismount the database.

- Run eseutil with the /mh parameter.
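For reference, the commonly suggested route above could be sketched as follows. The database name and the .edb path are hypothetical placeholders; `eseutil /mh` only dumps the database header, and it needs the database to be offline, which is exactly what made this option impractical here.

```powershell
# Hypothetical database name and file path; substitute your own
Dismount-Database -Identity "DB01" -Confirm:$false

# Dump the database header (look at the State and Log Required fields)
eseutil /mh "D:\Databases\DB01\DB01.edb"

# Bring the database back online afterwards
Mount-Database -Identity "DB01"
```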

I had a lot of databases, and dismounting them all to run the utility was nearly impossible, even on a weekend. As I continued my research, I found a thread on TechNet which mentioned, alongside running eseutil, moving the logs to another directory so that they would get recreated. And here it hit me!

What if I re-enabled circular logging and then tried to update the database copy? Since circular logging had been enabled before, I was already missing a lot of the transaction logs that had originally been written for the database!

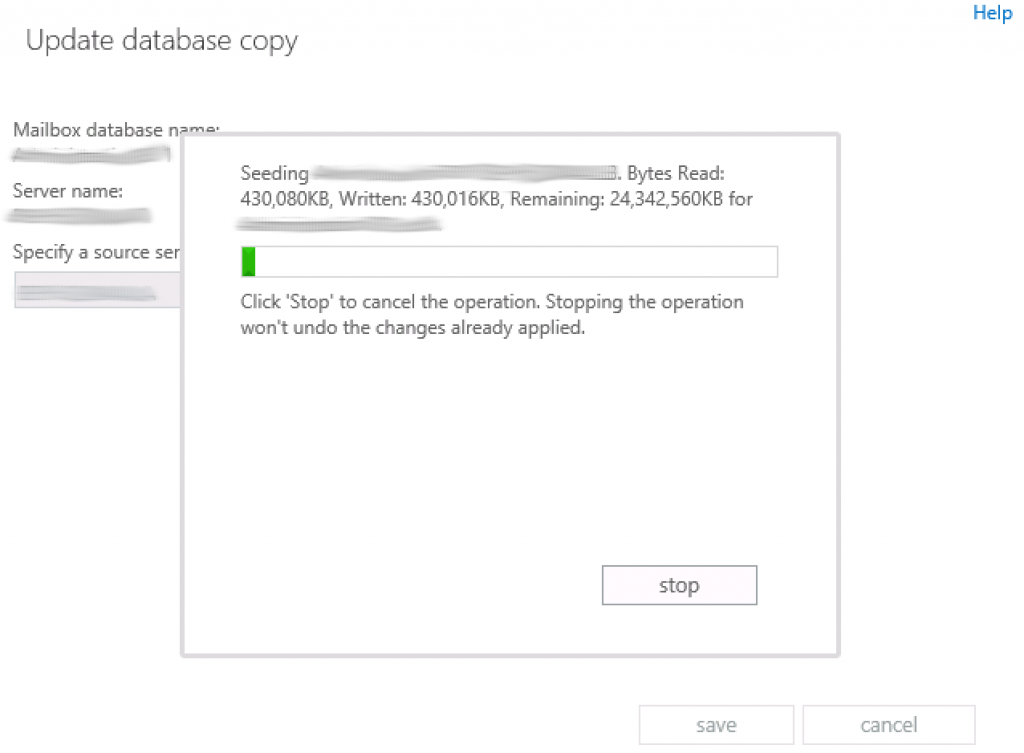

- First I tried the Update option, but sadly I got the same error.

- Then I tried the Resume option, which again resulted in the Failed and Suspended status, and then hit the Update option again.

- AND IT WORKED :-D
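I did all of this from the console, but the same sequence could be sketched in Exchange Management Shell roughly as follows. This is a hedged sketch, not a tested script: `DB01` and `EXCH02` are hypothetical names, the commands are meant to be run one at a time, and `-DeleteExistingFiles` is my assumption for clearing out whatever the failed seed left behind on the target.

```powershell
# Hypothetical names: DB01 (database), DB01\EXCH02 (the failed copy)
$copy = "DB01\EXCH02"

# Re-enable circular logging on the database
Set-MailboxDatabase -Identity "DB01" -CircularLoggingEnabled $true

# First reseed attempt (this one still failed with the same error for me)
Update-MailboxDatabaseCopy -Identity $copy -DeleteExistingFiles

# Resume the copy (it went back to Failed and Suspended)
Resume-MailboxDatabaseCopy -Identity $copy

# Second reseed attempt (this one started copying)
Update-MailboxDatabaseCopy -Identity $copy -DeleteExistingFiles

# Check the copy status and queue lengths afterwards
Get-MailboxDatabaseCopyStatus -Identity $copy
```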

I was glad that I did not have to go through the eseutil havoc, as going through all the databases would have set the project back by at least a week. I hope this helps!

(Abdullah)^2

Great, I had this same problem. I did the same thing and it worked.

Thanks

Most welcome, glad this helped out :).

Thank you !

Most welcome :).

How long did you wait before you tried to reseed after turning on circular logging? Was it straight away or the next day?

Hello, straight away, as I recall.

Hello, you are a life saver… I had been sitting here pulling my ears until I stumbled on your post.

It worked for me as well.

Thank you :).

Sweet!! I’m fairly new to Exchange back-end stuff and ran into this about an hour ago. I feared I would be spending hours and hours troubleshooting, but your page came up 3rd in my search and took me right to a solution. Thanks for sharing, you saved me a ton of time!!

Awesome, glad I could help :).

Thanks, it works:

When the second copy is in a failed state, enable circular logging. No dismount is needed, because Exchange thinks that there is already a copy.

Then Resume.

Then Update.

I can’t believe this worked. Thanks so much for posting!!

Glad it helped :).

Worked like a charm, but I had to disable the DAG network adapters first.

Thank you, worked for me.

Glad to know :).

Thank You Very Much.

You are a life-saving hero.

Thank you very much @Abdullah,

I also followed the steps you shared and they worked perfectly well on Exchange 2016.

Glad that I could help :).

I had the same problem on Exchange 2019. I have read many posts with very complex scenarios, but the following steps work perfectly. I can’t believe it: UPDATE, RESUME, UPDATE and it starts copying. Thank you very much.

Thanks man! Super random solution. Fixed it for me as well.

Had the same issue on Exchange 2013, and this worked perfectly. Thanks, you’re a legend.

You are a life saver!