NSX-T Unified Appliance Clustering

I’ve been quite busy recently (a lot going on with work, studies, certifications and, obviously, family), so apologies for the break from blogging. Hopefully I will catch up on what I’ve learned over the past months.

When NSX-T was first released, I honestly couldn’t start with it, as I was preparing for my VCDX-NV at the time and mixing up the two products would have been a bad move. With the release of 2.4, NSX-T has reached feature parity with NSX-v. That means a lot of migrations to come, and a lot of deployments covering the use cases we used to handle with NSX-v and much more.

The teams working on NSX-T, across all units, are doing an awesome job. I believe 2.4 was baked with a lot of love and attention.

One of the features that caught my attention was the ability to cluster the NSX-T unified appliance, which means you can now cluster:

- The NSX Manager role.

- The NSX Policy role.

- The NSX Controller role.

- The back-end database where the data is written (the Corfu database, which you will see referred to as the datastore).

In addition to clustering these roles, we can now front UI and API access with an internally configured virtual IP (VIP).

After deploying the first appliance, and before starting any configuration, I believe it is a good move to cluster the appliances straight away, keeping the following in mind:

- Preferably, deploy the appliances on separate datastores.

- Clustering the appliances does not create any vCenter Server DRS rule (such as an anti-affinity rule keeping the appliances on separate hosts), so you need to create one manually.

- Be patient when building the appliances, as the process takes some time to complete.

- The cluster’s quorum is based on node majority, so in a three-node cluster at least 2 appliances must be up and running for the cluster, and therefore its underlying services, to be available.

- If you’re testing this in your lab, note that the appliance has a 16GB memory reservation. If you don’t have enough resources, the clustering process will fail and you’ll have to delete the configuration and start over. To bypass this, you’ll need to unleash your swift clicky-clicky skills and edit the settings of the deployed OVF to remove the reservation before the VM powers on ;-).

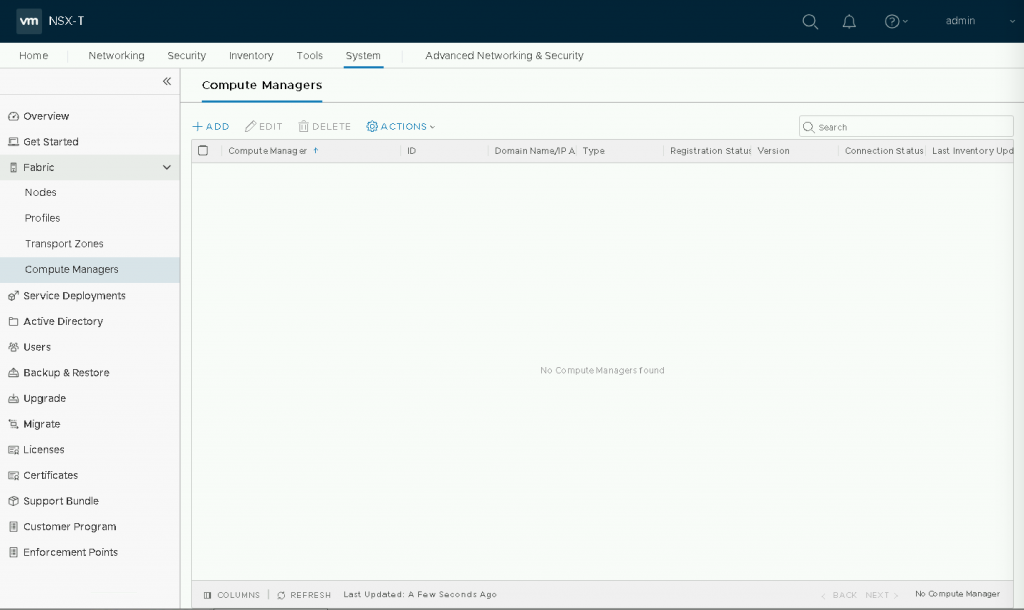

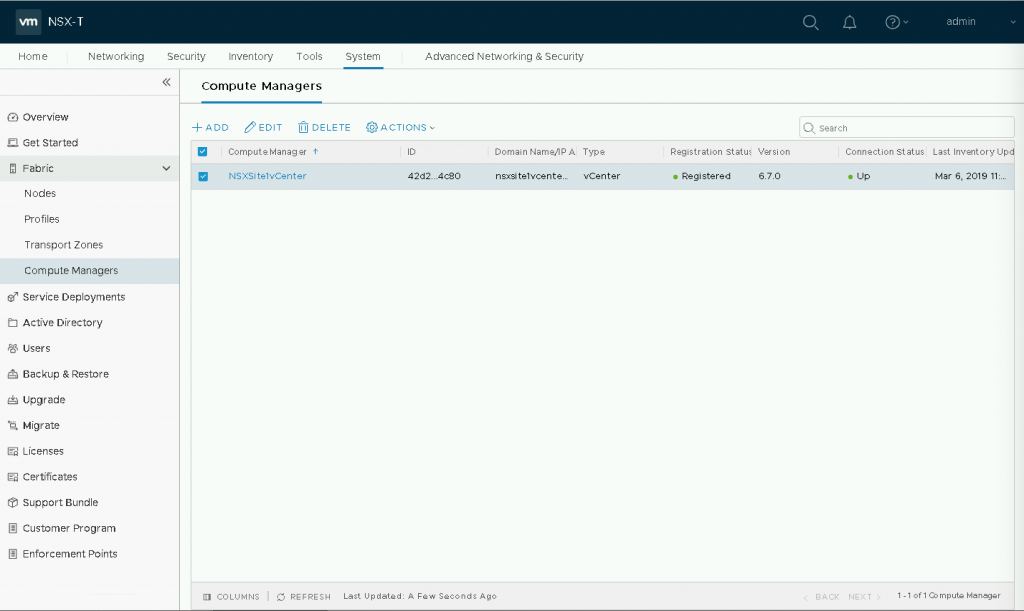
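To make the node-majority point above a bit more concrete, here is a minimal sketch of the arithmetic. It is generic majority-quorum math, not NSX-T code, and the function names are my own (hypothetical). It also shows why a two-node cluster buys you nothing: it still cannot tolerate a single failure.

```python
def quorum_size(cluster_nodes: int) -> int:
    """Minimum number of nodes that must be up to hold a node-majority quorum."""
    if cluster_nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return cluster_nodes // 2 + 1


def tolerated_failures(cluster_nodes: int) -> int:
    """How many nodes can fail while the survivors still form a majority."""
    return cluster_nodes - quorum_size(cluster_nodes)


def has_quorum(cluster_nodes: int, nodes_up: int) -> bool:
    """True when enough nodes are up for the cluster (and its services) to stay available."""
    return nodes_up >= quorum_size(cluster_nodes)


if __name__ == "__main__":
    for n in (1, 2, 3):
        print(f"{n} node(s): quorum={quorum_size(n)}, tolerated failures={tolerated_failures(n)}")
```

For the three appliances used in this post, the quorum is 2 and one appliance can be lost without losing the cluster.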

With the above said, let us begin. First, you need to add a compute manager. If you have multiple vCenter Servers, this will presumably be your management vCenter Server, and we’re only adding it for the purpose of deploying additional NSX-T nodes.
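If you prefer scripting this step, the compute manager can also be registered through the NSX-T REST API. The sketch below only builds the request (it sends nothing) and assumes the `POST /api/v1/fabric/compute-managers` endpoint and body fields as I understand them from the 2.4 API reference; please verify them against the API guide for your version. The host names, credentials and the helper name are hypothetical placeholders.

```python
import base64
import json
import urllib.request


def build_compute_manager_request(nsx_manager: str, nsx_user: str, nsx_password: str,
                                  vcenter: str, vc_user: str, vc_password: str,
                                  vc_thumbprint: str) -> urllib.request.Request:
    """Build (but do not send) a POST registering a vCenter Server as a compute manager.

    Assumption: endpoint path and body field names follow the NSX-T 2.4 API reference.
    """
    body = {
        "server": vcenter,
        "origin_type": "vCenter",
        "credential": {
            "credential_type": "UsernamePasswordLoginCredential",
            "username": vc_user,
            "password": vc_password,
            # certificate thumbprint of the vCenter Server being registered
            "thumbprint": vc_thumbprint,
        },
    }
    # HTTP basic authentication against the NSX-T Manager
    token = base64.b64encode(f"{nsx_user}:{nsx_password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{nsx_manager}/api/v1/fabric/compute-managers",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
```

To actually send it you would pass the request to `urllib.request.urlopen`; in a lab with self-signed certificates you will also need to supply an SSL context that trusts the NSX-T Manager certificate.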

Once the vCenter Server is added, you’ll be looking at this:

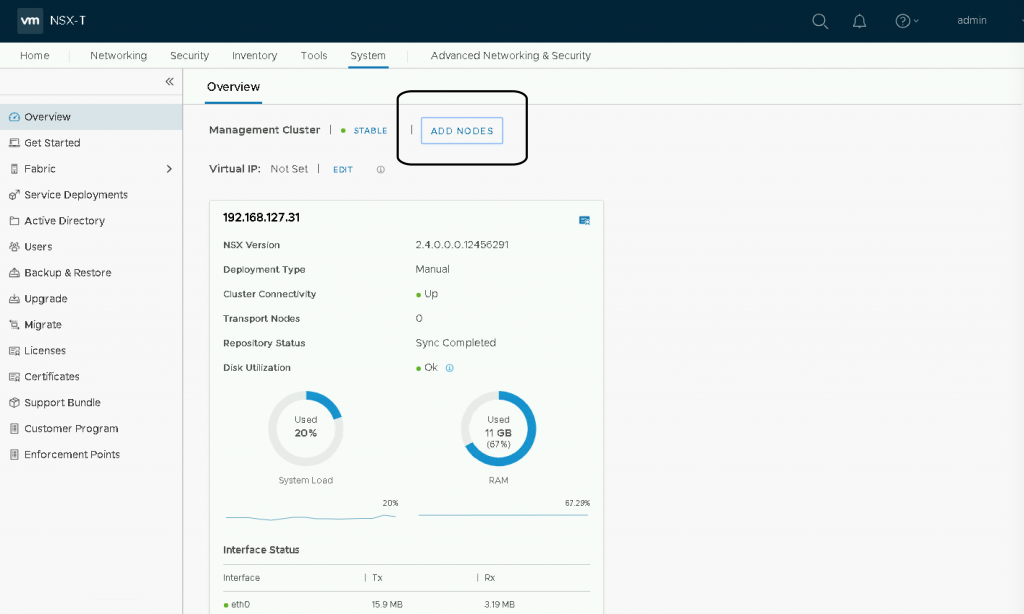

Go back to Overview and click on “ADD NODES”:

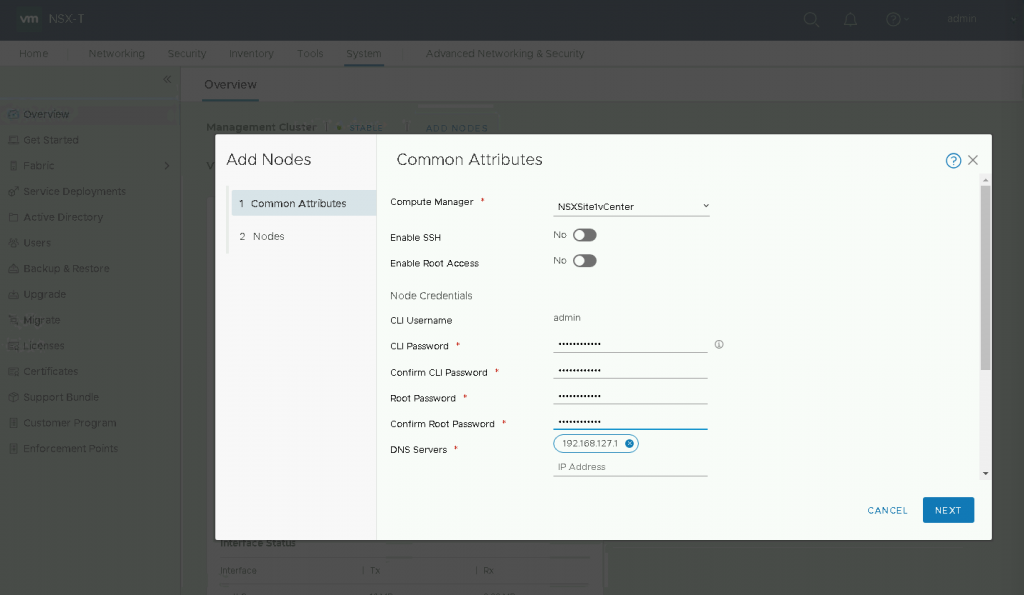

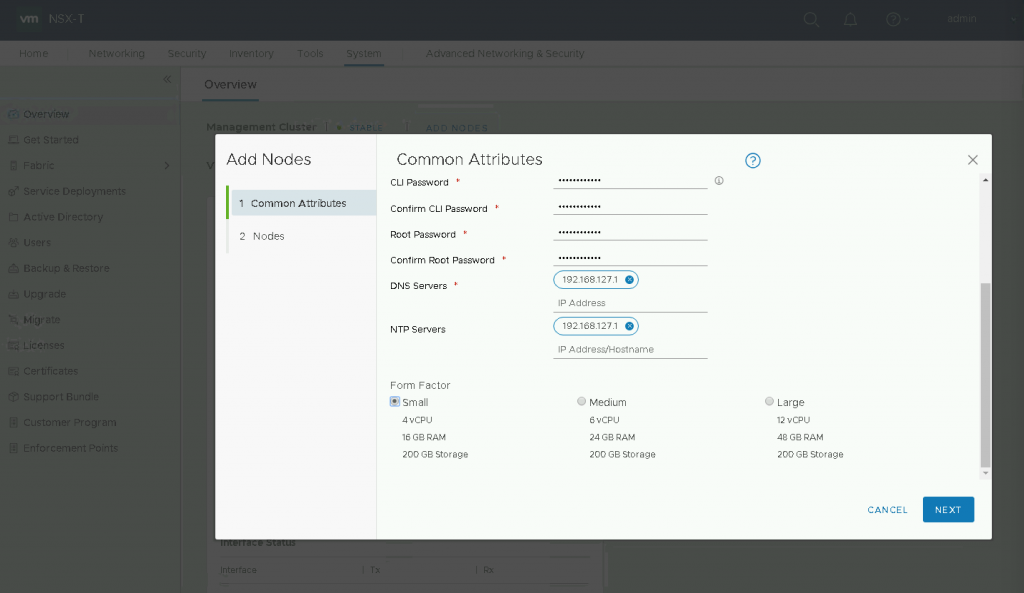

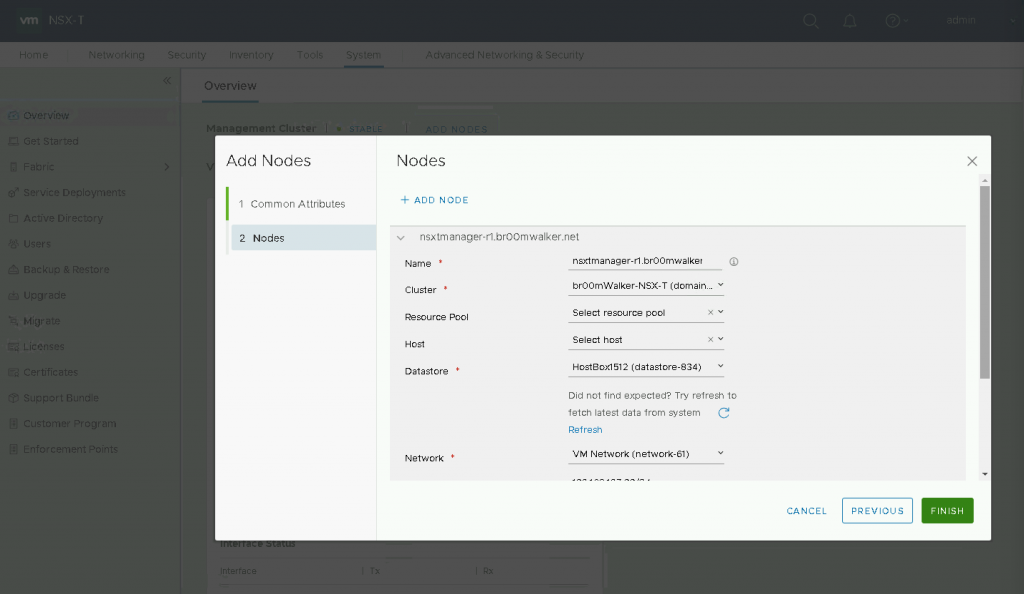

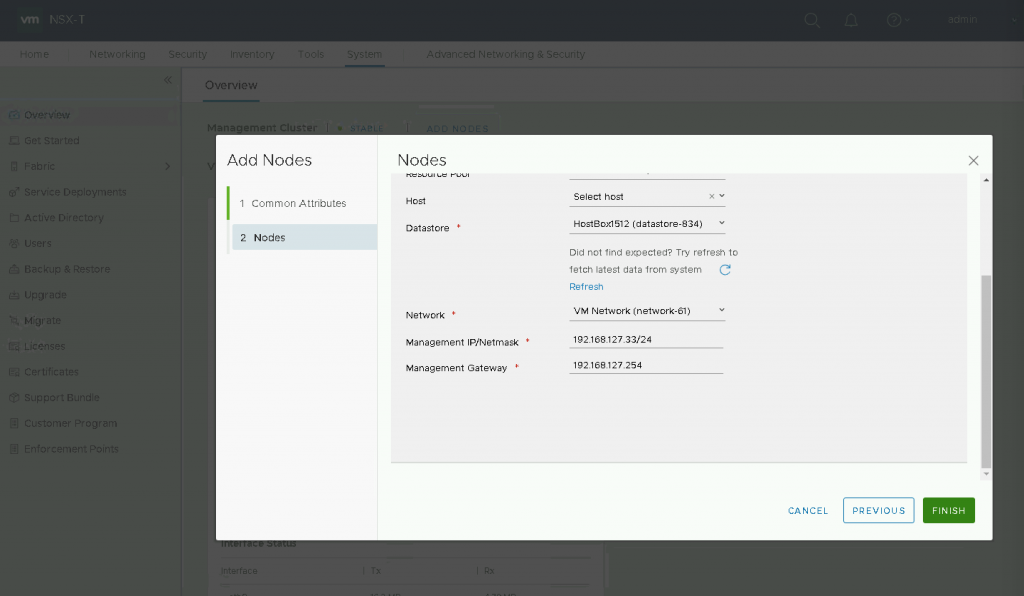

A dialog opens for you to fill in the node information:

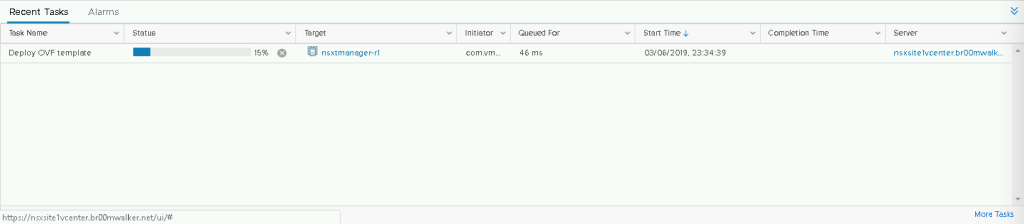

Once you click Finish, the new node’s OVF deployment kicks in. From a vCenter Server perspective, it looks like the deployment of any other appliance:

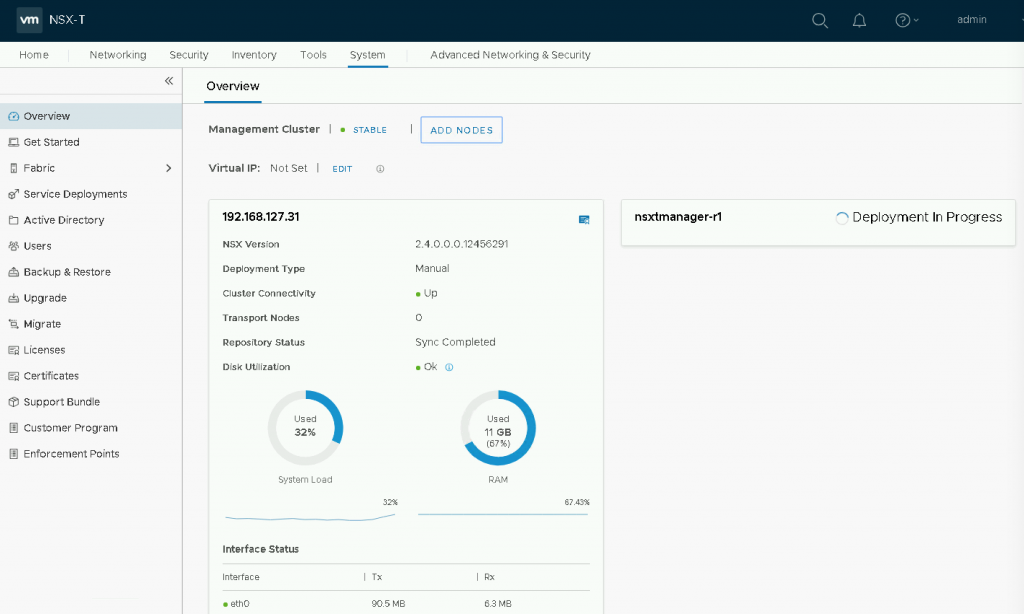

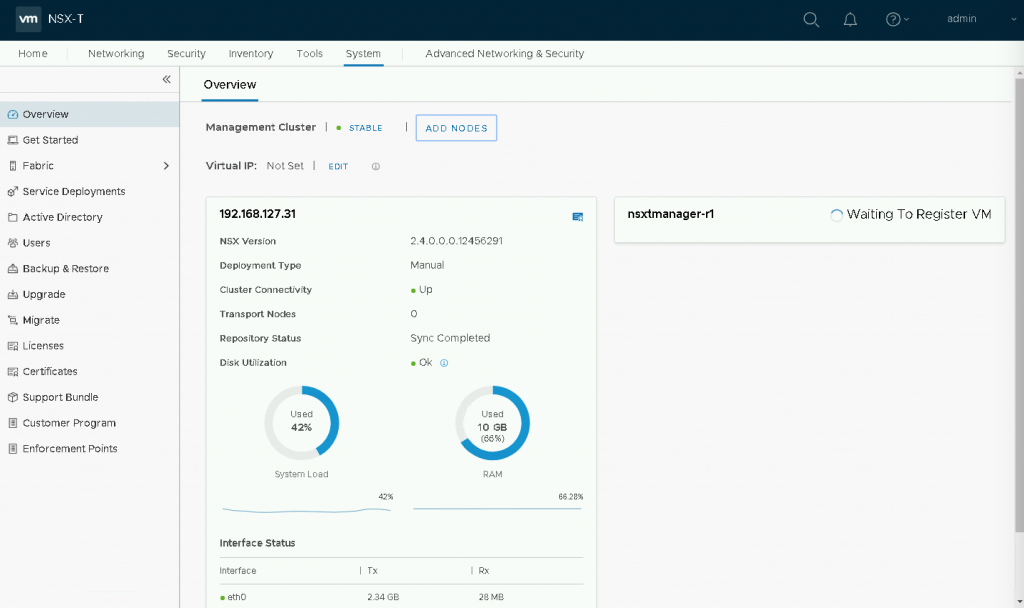

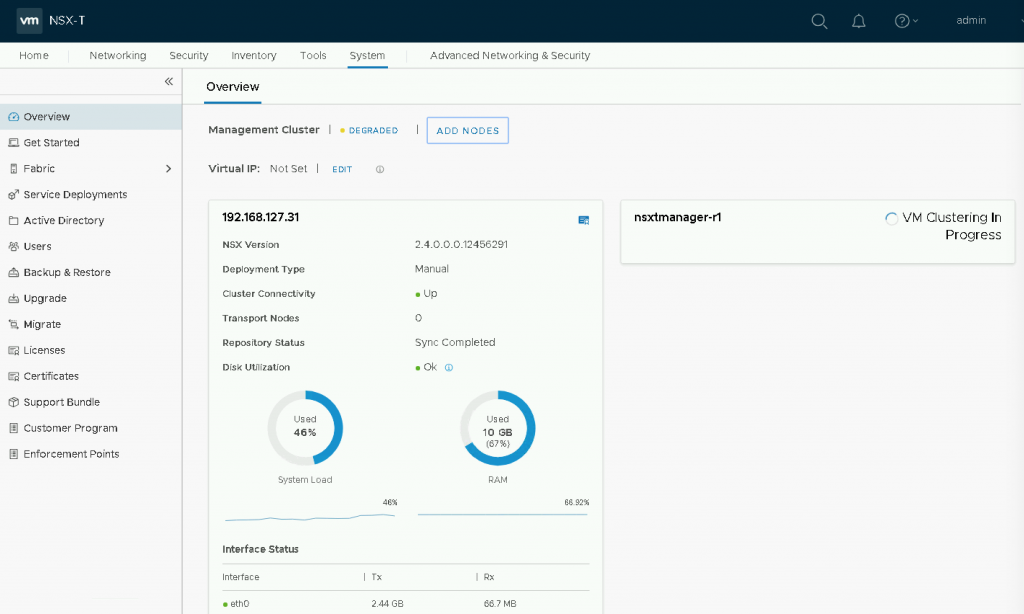

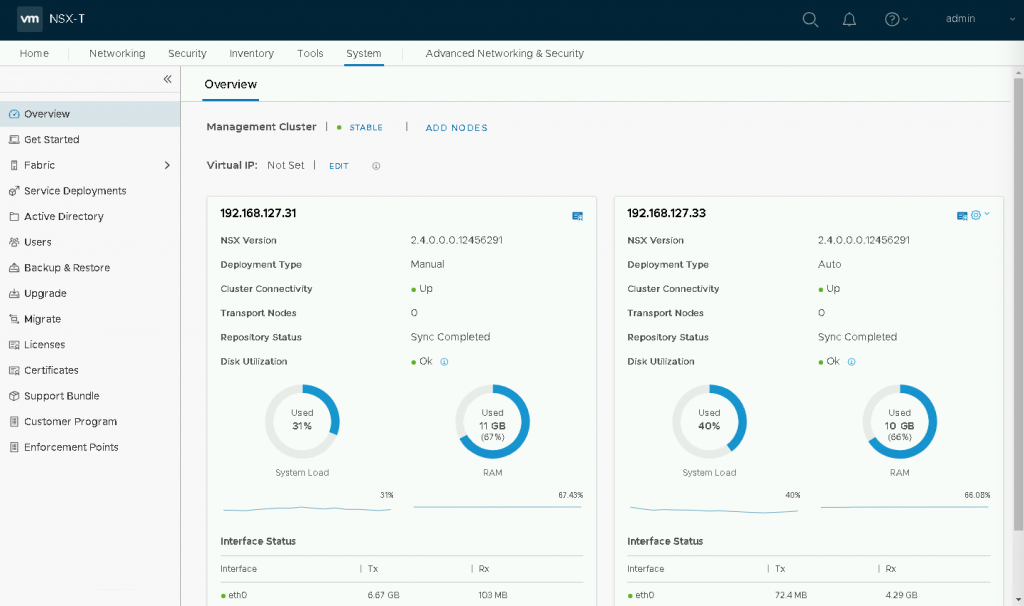

From the NSX-T Manager’s point of view, the deployment shows as in progress until the appliance has booted, after which the status is updated as the node goes through the cluster join phases:

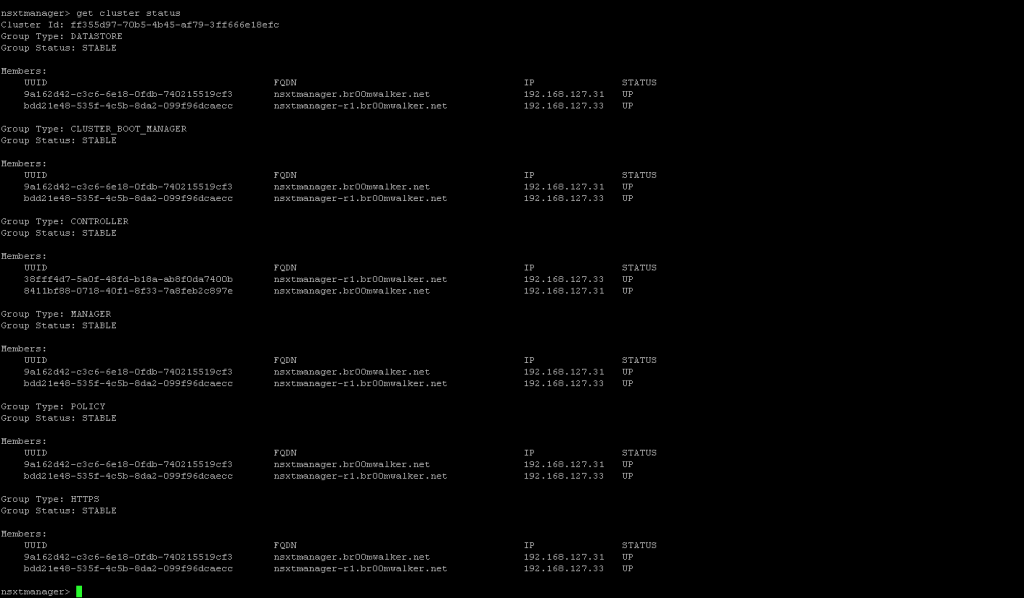

After the appliance is deployed and synced, you can SSH to the NSX-T Manager and check the cluster configuration via the command “get cluster status”:

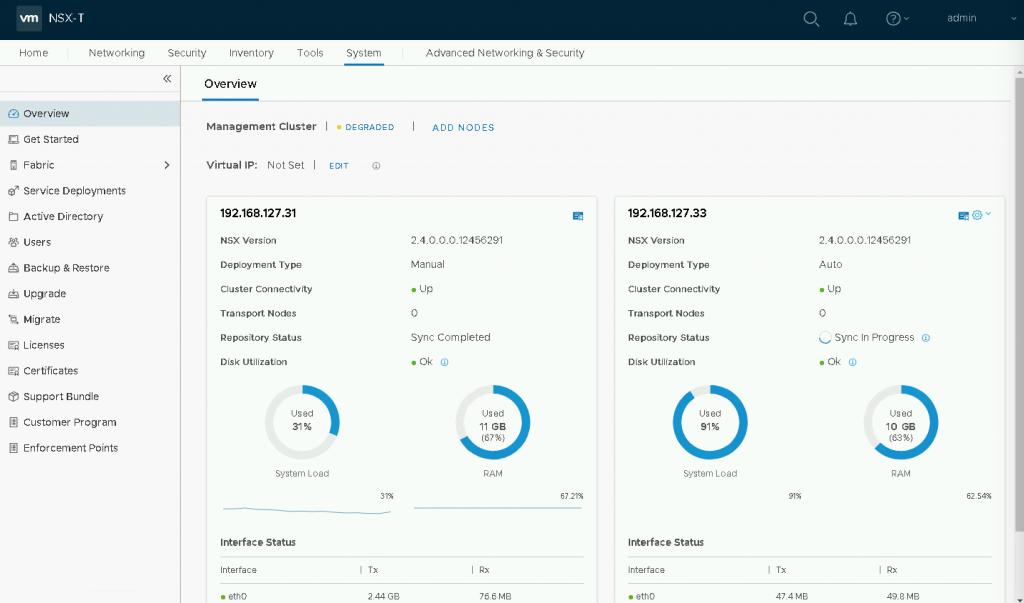

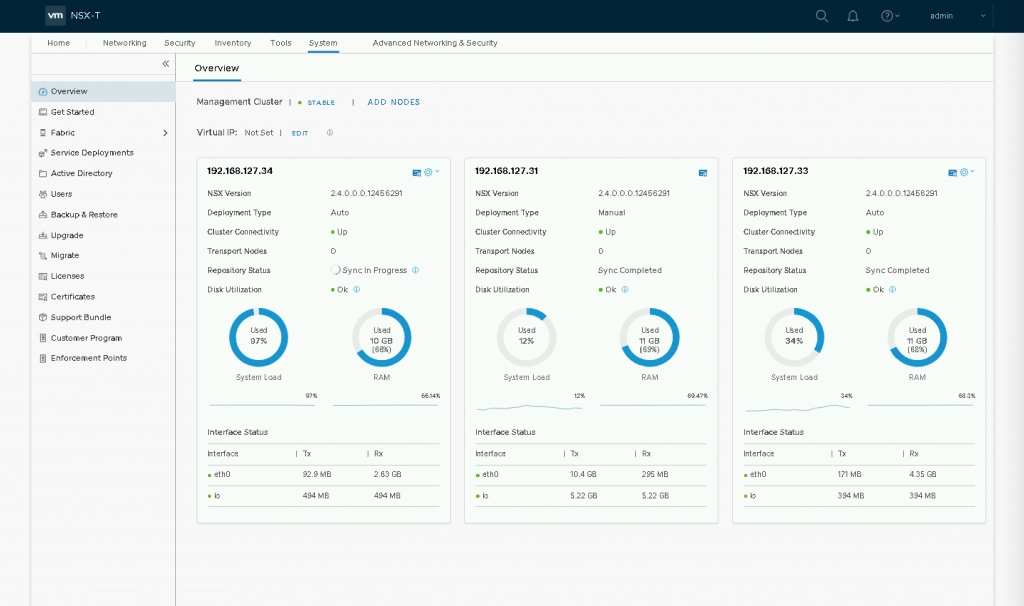

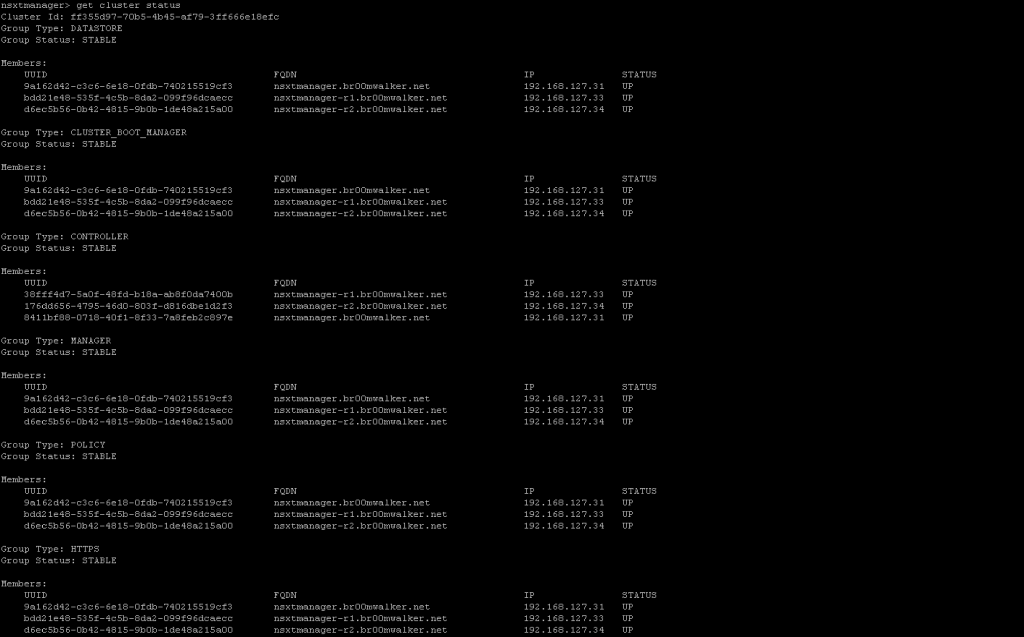
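The same information is also exposed over the REST API, which is handy if you want to poll the cluster from a script instead of SSHing to each node. The sketch below only builds an authenticated `GET /api/v1/cluster/status` request; the manager name and credentials are hypothetical, and I deliberately leave the response parsing out since the exact JSON layout should be checked against the API reference for your version.

```python
import base64
import urllib.request


def build_cluster_status_request(nsx_manager: str, username: str,
                                 password: str) -> urllib.request.Request:
    """Build (but do not send) a GET for the NSX-T cluster status."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    req = urllib.request.Request(f"https://{nsx_manager}/api/v1/cluster/status", method="GET")
    req.add_header("Authorization", f"Basic {token}")  # HTTP basic auth
    req.add_header("Accept", "application/json")       # ask for a JSON response
    return req


if __name__ == "__main__":
    # hypothetical lab manager and credentials
    r = build_cluster_status_request("nsx-mgr-01.lab.local", "admin", "changeme")
    print(r.get_method(), r.full_url)
```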

For the cluster formation to complete, we need three appliances. Following the same method as before, deploy a third appliance; once it is done, all three appliances should be up and synchronized:

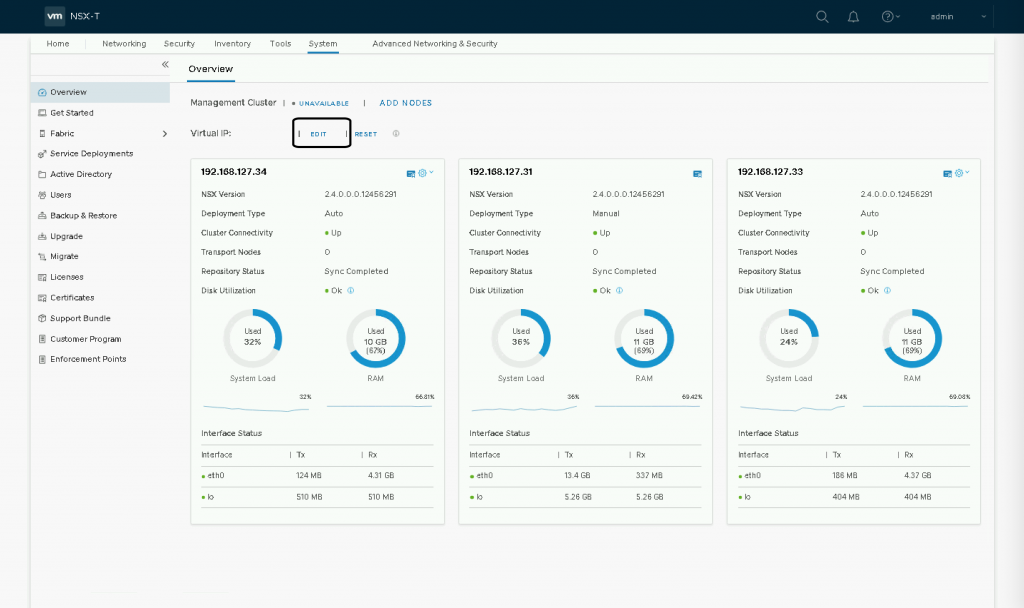

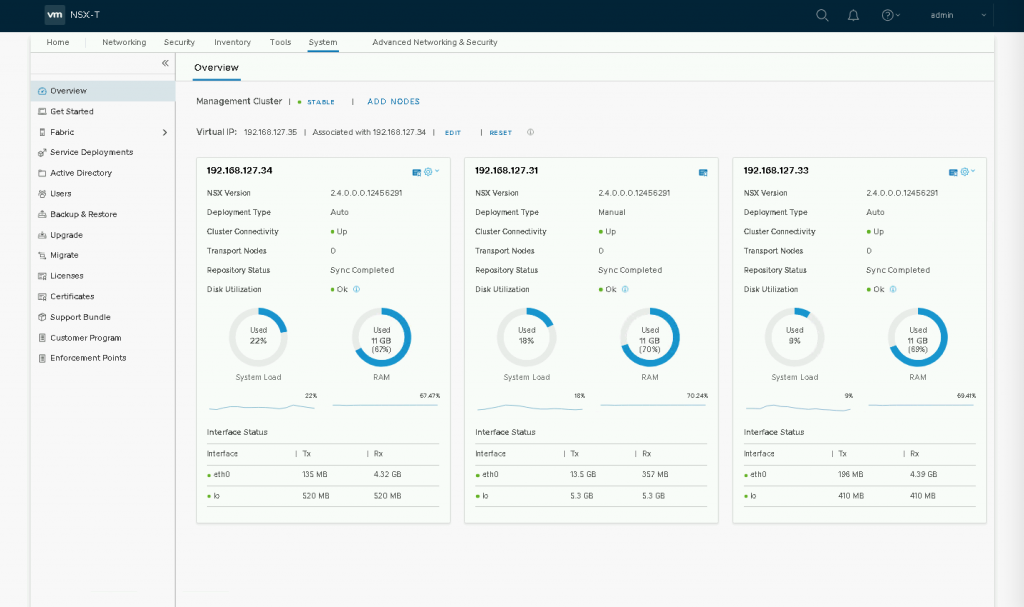

The last thing to do is create a virtual IP address (VIP) that will be used as the single entry point for the cluster. While in the Overview window, click Edit next to Virtual IP and type in an IP address within the same IP range as the NSX-T Managers. The VIP is created as a sub-interface and is assigned to the master node in the cluster.
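Before typing in the VIP, a quick sanity check can save a failed attempt. The sketch below reads “same IP range” as “same subnet as every manager node” (my interpretation) and also rejects a VIP that reuses a node’s own address. It uses only Python’s standard `ipaddress` module; the function name and the example addresses are hypothetical.

```python
from __future__ import annotations

import ipaddress


def vip_in_manager_subnet(vip: str, manager_ips: list[str], prefix_length: int) -> bool:
    """Return True if the VIP sits in every manager's subnet and is not a node address."""
    vip_addr = ipaddress.ip_address(vip)
    for ip in manager_ips:
        # strict=False builds the network from a host address, e.g. 10.0.0.11/24 -> 10.0.0.0/24
        subnet = ipaddress.ip_network(f"{ip}/{prefix_length}", strict=False)
        if vip_addr not in subnet:
            return False
    # the VIP must be a free address, not one already used by a manager node
    return vip_addr not in {ipaddress.ip_address(ip) for ip in manager_ips}


if __name__ == "__main__":
    managers = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]
    print(vip_in_manager_subnet("10.0.0.10", managers, 24))
```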

When the UI is accessed via the VIP, the dashboard tells you which appliance currently owns it.
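The VIP can also be set and read back through the API. This is a sketch under assumptions: it uses the `POST /api/v1/cluster/api-virtual-ip?action=set_virtual_ip&ip_address=...` and `GET /api/v1/cluster/api-virtual-ip` calls as I understand them from the 2.4 API reference, so verify them for your version before relying on it. The requests are only built, not sent, and the helper names, host and credentials are hypothetical.

```python
import base64
import urllib.parse
import urllib.request


def _basic_auth(username: str, password: str) -> str:
    """HTTP basic authentication header value."""
    return "Basic " + base64.b64encode(f"{username}:{password}".encode()).decode()


def build_set_vip_request(nsx_manager: str, username: str, password: str,
                          vip: str) -> urllib.request.Request:
    """Build (but do not send) a POST that assigns the cluster VIP (assumed endpoint)."""
    query = urllib.parse.urlencode({"action": "set_virtual_ip", "ip_address": vip})
    req = urllib.request.Request(
        f"https://{nsx_manager}/api/v1/cluster/api-virtual-ip?{query}", method="POST")
    req.add_header("Authorization", _basic_auth(username, password))
    return req


def build_get_vip_request(nsx_manager: str, username: str,
                          password: str) -> urllib.request.Request:
    """Build (but do not send) a GET that reads back the configured VIP (assumed endpoint)."""
    req = urllib.request.Request(
        f"https://{nsx_manager}/api/v1/cluster/api-virtual-ip", method="GET")
    req.add_header("Authorization", _basic_auth(username, password))
    req.add_header("Accept", "application/json")
    return req
```

Send the set request to any one of the manager nodes, then query the VIP itself in the browser to see which appliance the dashboard reports as the owner.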

There you have it: a simple and elegant cluster with a VIP and high availability across the board :). In my next blog post, I will test failure scenarios for the appliances and cover what happens and what to expect.

Thank you,

(Abdullah)^2
