NSX: Cluster is already configured for VXLAN

One of the things I tend to do when preparing to dig into a product, and of course its certification, is to tear my lab apart at a steady pace, because I like to break it as much as I can. This time, though, I didn't do it myself :( and I didn't get to enjoy it *sniff*.

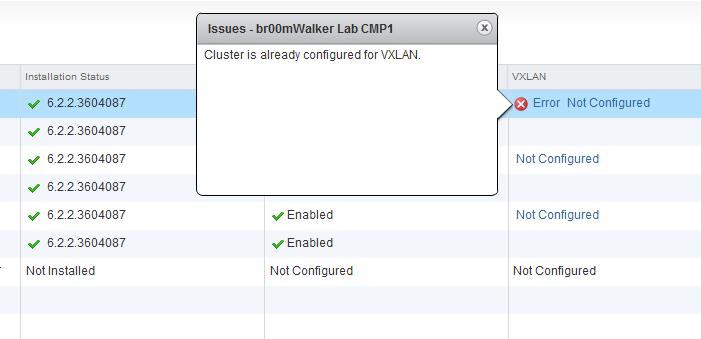

So I powered on my lab and found that something was wrong with the VXLAN configuration:

- The VTEPs on my hosts were showing as problematic.

- I couldn't ping any VM on a logical switch connected to a logical router.

- I couldn’t ping my logical router from my edge.

All of the above had been working normally at an earlier stage, so I decided to remove everything and start clean. To do this I followed this guide as usual, and it went smoothly:

- I reinstalled the VTEPs successfully.

- But when I tried to configure VXLAN, that is where the problem started: the cluster was reported as already configured for VXLAN.

I tried almost everything to get around this, with no luck:

- I double-checked that all of the previous configuration had been removed; the result was the same.

- I changed the VXLAN IP address distribution.

- I looked for something in the NSX Manager CLI and the controllers' CLI that could help; nothing there either.

- And this was happening on all 3 of my clusters (Compute1, Compute2 and Management).

Finally, I took the error at its word:

- I cleaned up everything again.

- I created 3 new clusters and moved each host into its respective new cluster.

- I went through the VTEP installation again and then configured VXLAN successfully.

At the moment everything is working as expected. I believe there is something in the cluster configuration that we should be able to tap into in order to clear this allocation. If you know how, or know someone who can get us this information, please share it with me so I can update this post.

Thank you,

(Abdullah)^2

I don't believe it's in the cluster's config; I believe it's stored in the Postgres database that the NSX Manager runs locally. Unfortunately, VMware won't let us in there just yet.

Might be; thank you for the info :-).